How to go from a Jupyter notebook to a production AI system

Let’s go over the crucial steps for turning your precious AI demo into a real AI system people can use.

Start fresh

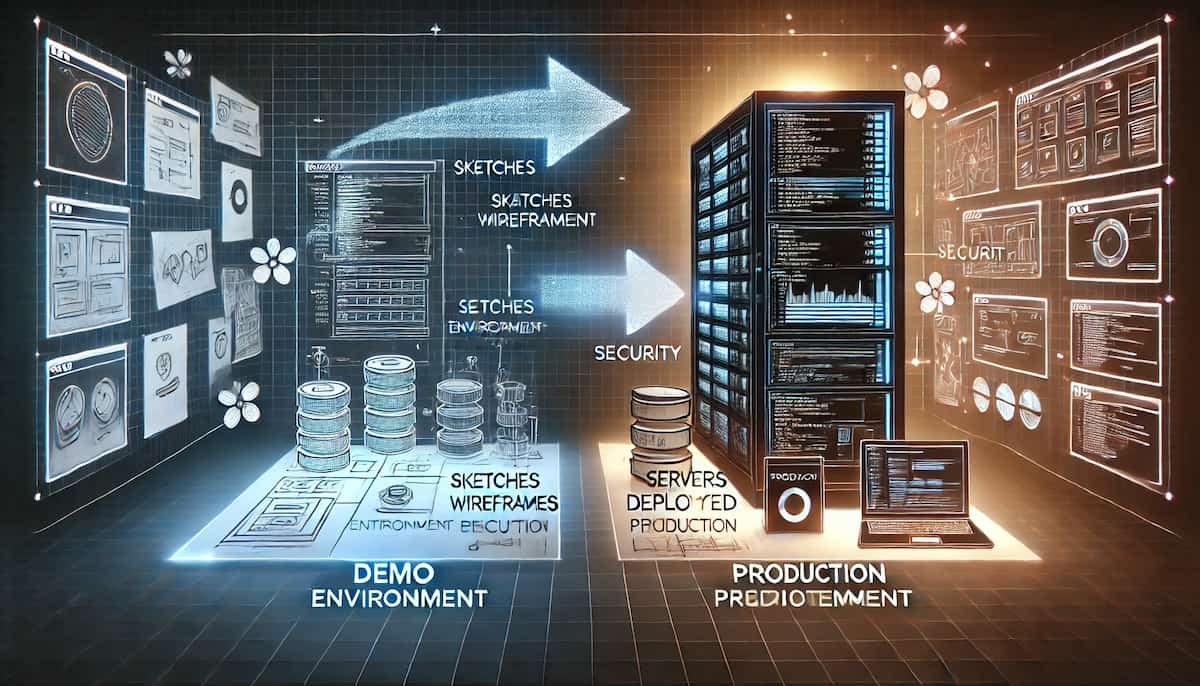

The journey from a proof-of-concept Jupyter notebook to a production-ready AI system begins with a crucial decision: starting fresh. While your prototype notebook served as an invaluable experimental ground, transforming it directly into production code often creates more challenges than solutions.

Here are just some of the challenges with the first prototype:

Testability: prototype code is almost never easy to test.

Modularity: demos rarely have proper classes or apply object-oriented principles, which are what allow your system to grow and gain all the necessary bells and whistles.

Security is non-existent: API keys are likely thrown all over the place and secrets aren’t properly managed.

Performance: demo code is almost never optimized to handle multiple users and concurrent requests.

Database and ORM: I never use databases in my demos; it’s usually all in text files. In AI apps especially, it’s critical to persist memory properly so each user’s context keeps getting better.
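To make the security point concrete, here is a minimal sketch of reading an API key from the environment instead of hard-coding it in a notebook cell. The variable name `LLM_API_KEY`, the `Settings` class, and `load_settings` are all hypothetical names I picked for illustration; your provider and framework will have their own conventions.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


class MissingSecretError(RuntimeError):
    """Raised at startup when a required secret is not configured."""


@dataclass(frozen=True)
class Settings:
    # Hypothetical setting name; use whatever your provider expects.
    llm_api_key: str


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Read secrets from the environment and fail fast if one is missing."""
    env = os.environ if env is None else env
    key = env.get("LLM_API_KEY", "").strip()
    if not key:
        raise MissingSecretError("LLM_API_KEY is not set")
    return Settings(llm_api_key=key)
```

Failing at startup is a deliberate choice here: a missing key shows up immediately rather than on the first user request.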

Pick a robust web framework

Just pick a framework and move forward with it. For Python my choice is FastAPI; for TypeScript folks I think Nest.js is becoming the most stable choice with the most batteries included, meaning it has a modular design and dependency injection, and you’re not required to pick a ton of additional libraries.

At the end of the day, pick the thing you’re most comfortable and most productive with.

But in my experience, using a micro-framework that forces you to pick 10 additional libraries just to get a basic web app running is not worth it.

Set up logging and observability

For web app error logging, Sentry is a popular choice that works with almost all frameworks. Set it up in 5 minutes and be done with it; it’s easy to replace if needed.

More important for us is LLM observability. These are the top choices, and none of them is that much better than the rest:

LangSmith: the cousin of LangChain; they’re made to work together. Managed service with a free option.

Arize AI: self-hosted or managed. The managed version also has a free plan.

Weights & Biases Weave: free and paid plans for the hosted service. You can also host your own.
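Whichever tool you pick, the core idea is the same: wrap every LLM call and record what went in, what came out, and how long it took. Here is a vendor-neutral sketch using only the standard library. It is not the API of any of the tools above; `traced` and `fake_llm` are hypothetical names, and a real setup would send these records to your observability service instead of a logger.

```python
import functools
import logging
import time

logger = logging.getLogger("llm")


def traced(fn):
    """Log latency, outcome, and payload sizes for each LLM call."""

    @functools.wraps(fn)
    def wrapper(prompt: str, *args, **kwargs):
        start = time.perf_counter()
        status = "error"
        response = ""
        try:
            response = fn(prompt, *args, **kwargs)
            status = "ok"
            return response
        finally:
            # Runs on success and on failure, so errors are recorded too.
            elapsed_ms = (time.perf_counter() - start) * 1000
            logger.info(
                "llm_call name=%s status=%s latency_ms=%.1f "
                "prompt_chars=%d response_chars=%d",
                fn.__name__, status, elapsed_ms, len(prompt), len(response),
            )

    return wrapper


@traced
def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call.
    return prompt.upper()
```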

Pick a managed database

Do yourself a favor and pick a managed database; you don’t want that responsibility.

Your app can crash and you can restart it, but if you manage your own database and mess it up, you can lose data for good.

Everyone thinks about vector databases when we talk about GenAI, but you need to store regular data as well. A good choice is Postgres with pgvector, which lets you keep embeddings alongside the other tables your web app needs. Specialized vector databases might have some advantages over Postgres and pgvector, so do your own research on that.
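As a rough sketch of what "embeddings next to regular tables" can look like, here is a hypothetical schema and similarity query. The `documents` table, its columns, and the 1536 dimension are assumptions for illustration (match the dimension to your embedding model). The placeholders use the `%(name)s` style accepted by drivers such as psycopg; the helpers only build parameters and do not connect to a database.

```python
from typing import Dict, Sequence

# Hypothetical schema: regular columns and an embedding live in one table.
SCHEMA_SQL = """
CREATE EXTENSION IF NOT EXISTS vector;
CREATE TABLE IF NOT EXISTS documents (
    id        bigserial PRIMARY KEY,
    user_id   bigint NOT NULL,
    content   text   NOT NULL,
    embedding vector(1536)
);
"""

# `<=>` is pgvector's cosine-distance operator; smaller means more similar.
SEARCH_SQL = """
SELECT id, content
FROM documents
WHERE user_id = %(user_id)s
ORDER BY embedding <=> %(query)s::vector
LIMIT %(limit)s;
"""


def to_pgvector(values: Sequence[float]) -> str:
    """Format a sequence of numbers as a pgvector text literal like '[0.1,0.2]'."""
    return "[" + ",".join(repr(float(v)) for v in values) + "]"


def search_params(user_id: int, query_embedding: Sequence[float],
                  limit: int = 5) -> Dict[str, object]:
    """Build the parameter dict for SEARCH_SQL."""
    return {
        "user_id": user_id,
        "query": to_pgvector(query_embedding),
        "limit": limit,
    }
```

Note how the query filters on a plain `user_id` column and ranks by vector distance in one statement, which is the main convenience of keeping everything in Postgres.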

Design patterns for implementation

Let’s get to the code. Here are 5 essential design patterns that will make your life easier when building your GenAI apps for production.

1. Repository Pattern

- Abstracts data storage/retrieval logic from business logic

- Makes it easy to switch between different storage solutions (e.g., switching from MongoDB to PostgreSQL). If you’re using an ORM with your framework, much of this is already done for you

- Example: Separate classes for model storage, allowing you to change how models are stored without changing how they’re used
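Here is a minimal sketch of the model storage example. All class and function names (`ModelRepository`, `InMemoryModelRepository`, `FileModelRepository`, `promote`) are hypothetical; the point is only that `promote` works against the interface and never learns which backend it is talking to.

```python
from abc import ABC, abstractmethod
from pathlib import Path
from typing import Dict


class ModelRepository(ABC):
    """Storage interface that the rest of the app depends on."""

    @abstractmethod
    def save(self, name: str, data: bytes) -> None: ...

    @abstractmethod
    def load(self, name: str) -> bytes: ...


class InMemoryModelRepository(ModelRepository):
    """Handy for tests; nothing touches the disk."""

    def __init__(self) -> None:
        self._items: Dict[str, bytes] = {}

    def save(self, name: str, data: bytes) -> None:
        self._items[name] = data

    def load(self, name: str) -> bytes:
        return self._items[name]


class FileModelRepository(ModelRepository):
    """Stores each model as a file under a root directory."""

    def __init__(self, root: Path) -> None:
        self._root = Path(root)
        self._root.mkdir(parents=True, exist_ok=True)

    def save(self, name: str, data: bytes) -> None:
        (self._root / name).write_bytes(data)

    def load(self, name: str) -> bytes:
        return (self._root / name).read_bytes()


def promote(repo: ModelRepository, name: str) -> str:
    """Business logic: copy a model to a '-prod' name, unaware of the backend."""
    prod_name = f"{name}-prod"
    repo.save(prod_name, repo.load(name))
    return prod_name
```

Swapping file storage for a database or object store then means writing one more subclass, with no changes to `promote`.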

2. Factory Pattern

- Centralizes object creation logic

- Particularly useful for creating different versions of AI models or preprocessors

- Example: A ModelFactory that handles creation of different model versions, with proper resource management
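A `ModelFactory` along these lines could look like the sketch below. `EchoModel` is a hypothetical stand-in for a real model, and I am assuming "proper resource management" means, at minimum, building each version once and reusing it; a real factory might also handle GPU placement or unloading.

```python
from typing import Callable, Dict


class EchoModel:
    """Stand-in for a real model; returns the prompt with a version tag."""

    def __init__(self, version: str) -> None:
        self.version = version

    def predict(self, prompt: str) -> str:
        return f"[{self.version}] {prompt}"


class ModelFactory:
    """Single place that knows how to build each model version."""

    def __init__(self) -> None:
        self._builders: Dict[str, Callable[[], EchoModel]] = {}
        self._cache: Dict[str, EchoModel] = {}

    def register(self, version: str, builder: Callable[[], EchoModel]) -> None:
        self._builders[version] = builder

    def create(self, version: str) -> EchoModel:
        if version not in self._builders:
            raise ValueError(f"unknown model version: {version}")
        # Build each version once and reuse it; real models are costly to load.
        if version not in self._cache:
            self._cache[version] = self._builders[version]()
        return self._cache[version]


factory = ModelFactory()
factory.register("v1", lambda: EchoModel("v1"))
factory.register("v2", lambda: EchoModel("v2"))
```

Callers ask for `factory.create("v2")` and never construct models themselves, so adding a new version is a single `register` call.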

3. Strategy Pattern

- Enables swapping algorithms/approaches at runtime

- Perfect for A/B testing different model versions or preprocessing strategies

- Example: Different tokenization strategies that can be swapped without changing the rest of the pipeline
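The tokenization example can be sketched with plain functions as strategies. The two tokenizers and the `Pipeline` class are hypothetical and deliberately toy-sized; a real pipeline would plug in a proper tokenizer behind the same call signature.

```python
from typing import Callable, List

# A strategy is any callable that turns text into a list of tokens.
Tokenizer = Callable[[str], List[str]]


def whitespace_tokenize(text: str) -> List[str]:
    """Split on runs of whitespace."""
    return text.split()


def char_tokenize(text: str) -> List[str]:
    """One token per non-whitespace character."""
    return [c for c in text if not c.isspace()]


class Pipeline:
    """Uses whichever tokenizer it is given; the rest of the logic is unchanged."""

    def __init__(self, tokenizer: Tokenizer) -> None:
        self.tokenizer = tokenizer

    def count_tokens(self, text: str) -> int:
        return len(self.tokenizer(text))


pipeline = Pipeline(whitespace_tokenize)
words = pipeline.count_tokens("hello big world")

# Swap the strategy at runtime, e.g. for one arm of an A/B test.
pipeline.tokenizer = char_tokenize
chars = pipeline.count_tokens("ab c")
```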

4. Circuit Breaker Pattern

- Prevents system overload by failing fast when problems occur

- Crucial for AI systems that depend on external services or have compute-intensive operations

- Example: Automatically stopping new predictions if the error rate exceeds a threshold or if GPU memory is near capacity
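Below is a minimal circuit breaker sketch. Note the simplification: it trips on a count of consecutive failures rather than on an error rate or GPU memory, and the names (`CircuitBreaker`, `CircuitOpenError`, `max_failures`, `reset_after`) are my own. The injectable `clock` parameter is only there to make the behavior easy to test.

```python
import time
from typing import Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised when calls are rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after  # cool-down in seconds
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, fn, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                # Still cooling down: fail fast without touching the service.
                raise CircuitOpenError("circuit open; failing fast")
            # Cool-down elapsed: allow one trial call (half-open state).
            # A single failure now reopens the circuit immediately.
            self._opened_at = None
            self._failures = self.max_failures - 1
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()
            raise
        # Any success closes the circuit fully.
        self._failures = 0
        return result
```

You would wrap each call to the external model or service, for example `breaker.call(client_fn, prompt)` where `client_fn` is whatever function makes the request.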

5. Observer Pattern

- Implements a subscription mechanism for monitoring system events

- Excellent for monitoring model performance, logging predictions, and tracking system health

- Example: Observers that track model latency, accuracy, and resource usage without cluttering core prediction logic
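As a small sketch of this idea, the predictor below publishes an event after every prediction and a latency tracker subscribes to it. `Predictor`, `LatencyTracker`, and the event keys are hypothetical names; accuracy or resource-usage observers would subscribe the same way.

```python
import time
from typing import Callable, Dict, List

Event = Dict[str, object]
Observer = Callable[[Event], None]


class Predictor:
    """Runs a model function and notifies subscribers after each prediction."""

    def __init__(self, model_fn: Callable[[str], str]) -> None:
        self._model_fn = model_fn
        self._observers: List[Observer] = []

    def subscribe(self, observer: Observer) -> None:
        self._observers.append(observer)

    def predict(self, prompt: str) -> str:
        start = time.perf_counter()
        output = self._model_fn(prompt)
        event: Event = {
            "prompt": prompt,
            "output": output,
            "latency_s": time.perf_counter() - start,
        }
        # The core logic knows nothing about what observers do with the event.
        for observer in self._observers:
            observer(event)
        return output


class LatencyTracker:
    """Observer that keeps every latency so averages can be reported later."""

    def __init__(self) -> None:
        self.latencies: List[float] = []

    def __call__(self, event: Event) -> None:
        self.latencies.append(float(event["latency_s"]))


tracker = LatencyTracker()
predictor = Predictor(lambda prompt: prompt[::-1])  # toy "model"
predictor.subscribe(tracker)
```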